This course presents the fundamentals of Semantic Web technologies, i.e., how to represent knowledge and how to access and benefit from semantic data on the Web. It is taught in English by Dr. Harald Sack, a senior researcher at the Hasso Plattner Institute in Potsdam, and is available on the openHPI platform.

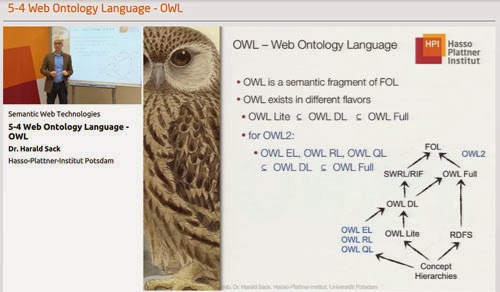

Course overview

This course is divided into 6 weeks, with about 4 hours of material per week (including the homework). An excursus is also offered in the middle of the course to present ways to enrich simple websites with semantic metadata (through microformats, RDFa and schema.org). All in all, this course takes a bare minimum of 25 hours to view, without any additional material, quiz or exam. Each week (or lecture) contains about seven 15- to 20-minute videos, each followed by a short quiz. Each lecture concludes with a homework assignment limited to one hour, with a due date. However, as this course was in archive mode when I followed it, I had no access to the homework assignments, only to their solutions. Finally, a 3-hour final exam concludes the course, but it is not accessible in archive mode either.

Dr. Harald Sack, the lecturer of this course, is a Senior Researcher and Head of the Research Group Semantic Technologies at the Hasso Plattner Institute (Potsdam).

Content

This course aims to explain the whole stack of Semantic Web technologies. Starting from the history of the web, it shows its limits and how a semantic approach is needed to overcome them. But what does semantics mean? The semiotic triangle explains it all: a symbol symbolizes a concept, which refers to an object, and the symbol stands for this object. Then we are introduced to the RDF and RDF Schema formalisms used to represent these triples of data, from graph representation to plain XML and Turtle syntax. After having been shown how to create some small knowledge bases in which we can infer implicit data from explicit data, we are taught how to go further by querying these bases with SPARQL, which is quite a bit more than a simple query language. The problem of storing RDF(S) data is illustrated with some triplestore implementations based on RDBMS. Nevertheless, RDF(S) still lacks some semantic expressivity. We are presented with the history of ontology in philosophy, then in knowledge representation, and introduced to both propositional logic and first-order logic, and to some algorithms to solve them (resolution through clausal form, and tableaux). We then go through Description Logic, the Web Ontology Language OWL (and more specifically its OWL 2 DL flavor, which is very expressive and yet still decidable), and rules (including Datalog and a few words about SWRL and RIF). After ontology hacking comes ontology engineering, i.e., using methodologies to design, map and merge ontologies, as ontologies need, like other projects, to be managed, scheduled, developed, controlled, QA’ed, maintained… The 101 process is explained, and the unified process and design patterns are briefly discussed. Linked Open Data is also presented, along with ways to interact with it, as well as the named entity recognition problem applied to it, which leads to the different approaches to semantic search, and particularly to exploratory search.
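To make the idea of inferring implicit data from explicit triples more concrete, here is a minimal sketch in plain Python. It is not taken from the course: the `ex:` names, the tiny data set and the `infer` and `match` helpers are all assumptions made up for illustration, and a real project would rather use a triplestore and SPARQL. It only covers the two RDFS entailment rules about `rdfs:subClassOf`, plus a single-pattern lookup that loosely mimics one SPARQL triple pattern.

```python
# Toy triple store: each fact is a (subject, predicate, object) tuple.
# Every "ex:" name and both helper functions are illustrative assumptions.

RDF_TYPE = "rdf:type"
RDFS_SUBCLASS = "rdfs:subClassOf"

triples = {
    ("ex:Lecturer", RDFS_SUBCLASS, "ex:Researcher"),
    ("ex:Researcher", RDFS_SUBCLASS, "ex:Person"),
    ("ex:harald", RDF_TYPE, "ex:Lecturer"),
    ("ex:harald", "ex:teaches", "ex:SemanticWebCourse"),
}


def infer(facts):
    """Apply two RDFS subclass rules until no new triple is derived."""
    closed = set(facts)
    while True:
        new = set()
        for s, p, o in closed:
            if p != RDFS_SUBCLASS:
                continue
            for s2, p2, o2 in closed:
                # rdfs11: rdfs:subClassOf is transitive.
                if p2 == RDFS_SUBCLASS and s2 == o:
                    new.add((s, RDFS_SUBCLASS, o2))
                # rdfs9: an instance of a class is an instance of its superclasses.
                if p2 == RDF_TYPE and o2 == s:
                    new.add((s2, RDF_TYPE, o))
        if new <= closed:
            return closed
        closed |= new


def match(facts, pattern):
    """Return variable bindings for one triple pattern; '?x' marks a variable."""
    results = []
    for triple in facts:
        binding = {}
        for term, value in zip(pattern, triple):
            if term.startswith("?"):
                # A repeated variable must bind to the same value each time.
                if binding.setdefault(term, value) != value:
                    break
            elif term != value:
                break
        else:
            results.append(binding)
    return results


closed = infer(triples)
persons = sorted(b["?who"] for b in match(closed, ("?who", RDF_TYPE, "ex:Person")))
print(persons)  # ['ex:harald']
```

Running the same pattern against the explicit `triples` set returns nothing, since the fact that `ex:harald` is an `ex:Person` only exists after inference.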

Requirements

This course has some requirements, namely a basic knowledge of web technologies (URL, HTTP, HTML, XML), database technology (relational databases and the SQL query language), and logic (propositional logic and first-order logic).

Benefits and other thoughts

The excursus about embedding semantic data within HTML code is quite interesting, especially after having learned about RDF. However (and this is a personal comment), I do not think it is very useful from an SEO point of view. Even if Google can show some so-called “rich” snippets on its results page (picture of the author, ratings, breadcrumbs…) from the machine-readable data we enrich our content with, it can also simply ignore this data or use it for its own purposes, such as hoarding free semantic data and displaying it at will on its own results page, where users get all the data they need without visiting the sites it comes from. In that sense, providing semantic metadata is like working for the machine, as these content tidbits can easily be detached from their original content (and context). On the other hand, this machine-understandable data may also be the very reason a specific page is returned among the first results of the SERPs.
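For readers who have never seen what such embedded metadata looks like, here is a small sketch in Python that prints an HTML fragment annotated with RDFa Lite attributes (`vocab`, `typeof`, `property`) and the schema.org vocabulary. The `rdfa_snippet` helper is hypothetical, and the course name used as a value is only a placeholder, not something taken from the excursus itself.

```python
from html import escape


def rdfa_snippet(item_type, properties, vocab="https://schema.org/"):
    """Render a <div> whose children carry RDFa Lite 'property' attributes."""
    lines = [f'<div vocab="{escape(vocab)}" typeof="{escape(item_type)}">']
    for name, value in properties.items():
        # Each property becomes a visible <span>, so humans and machines read the same text.
        lines.append(f'  <span property="{escape(name)}">{escape(value)}</span>')
    lines.append("</div>")
    return "\n".join(lines)


# Placeholder values: the course name is an assumption used for illustration.
snippet = rdfa_snippet(
    "Course",
    {"name": "Semantic Web Technologies", "inLanguage": "en"},
)
print(snippet)
```

The point of the design is that the annotated values stay part of the visible page, which is also why they are so easy for a search engine to lift out of their context.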

Another interesting point is the semantic exploratory search already implemented on Google's first results page, which also illustrates the previous point. As soon as a named entity is recognised in the query field of the search engine, a result from the Knowledge Graph is displayed on the right side of the page, including data from Wikipedia (and its semantic counterpart DBpedia) and other sources, such as facts, books, movies, etc. Users are guided in their (exploratory) search, but the sites providing these pieces of information are no longer visited, which can kill their business model, and Google can be seen as the ultimate predator of the data chain (which does not mean that a large-scale exploratory search engine would not benefit users by supporting queries that a keyword-based search engine cannot handle).

This is also a good illustration of the fact that the current web ecosystem has developed a set of rules that have perverted the idea behind its inception. Data is not free, and most of it is created at a price, or to earn something through it, whether recognition, traffic, or advertising fees. The Open Data movement is emblematic of a fight to free any data gathered through public funding, and Wikipedia can be seen as something of a collaborative resurgence of the Age of Enlightenment, but the rest of the web is mostly driven by economic forces. We now have a huge amount of data (“vastly, hugely, mind-bogglingly big”) at our fingertips, but the very search engines that help us navigate through it also dictate the way we consume it. Is the Semantic Web the future of the web, or just a way for the big players to organize the avalanche of data to their own ends?

Suggested reading

A Developer’s Guide to the Semantic Web, by Liyang Yu, Springer (2011)

Programming the Semantic Web, by Toby Segaran, Colin Evans & Jamie Taylor, O’Reilly Media (2009)

Technologies du web sémantique (MOOC) (in French)

Tecnologías de la web semántica (MOOC) (in Spanish)

Tecnologias da Web Semântica (MOOC) (in Portuguese)

The course will be repeated this year, starting on May 26th. Enrollment is open at https://openhpi.de/courses/semanticweb2014